Framework

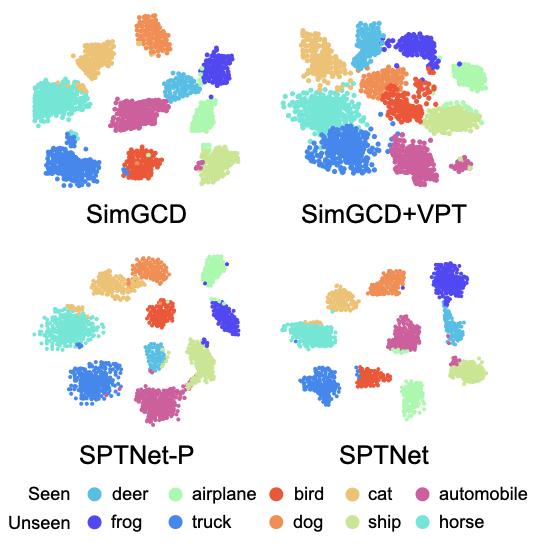

In the first stage, we attach the same set of spatial prompts to the input images. During training, we freeze the parameters of models and only update the prompt parameters.

In the second stage, we freeze prompt parameters and learn the parameters of models.With our spatial prompt learning as a strong augmentation, we aim to obtain a representation that can better distinguish samples from different classes, as the core mechanism of contrastive learning involves implicitly clustering samples from the same class together.

Different from prior works that apply only hand-crafted augmentations, we propose to consider prompting the input with learnable prompts as a new type of augmentation. The `prompted' version of the input can be adopted by all loss terms. In this way, our framework can enjoy a learned augmentation that varies throughout the training process, enabling the backbone to learn discriminative representations. Each stage optimizes the parameters for k iterations.

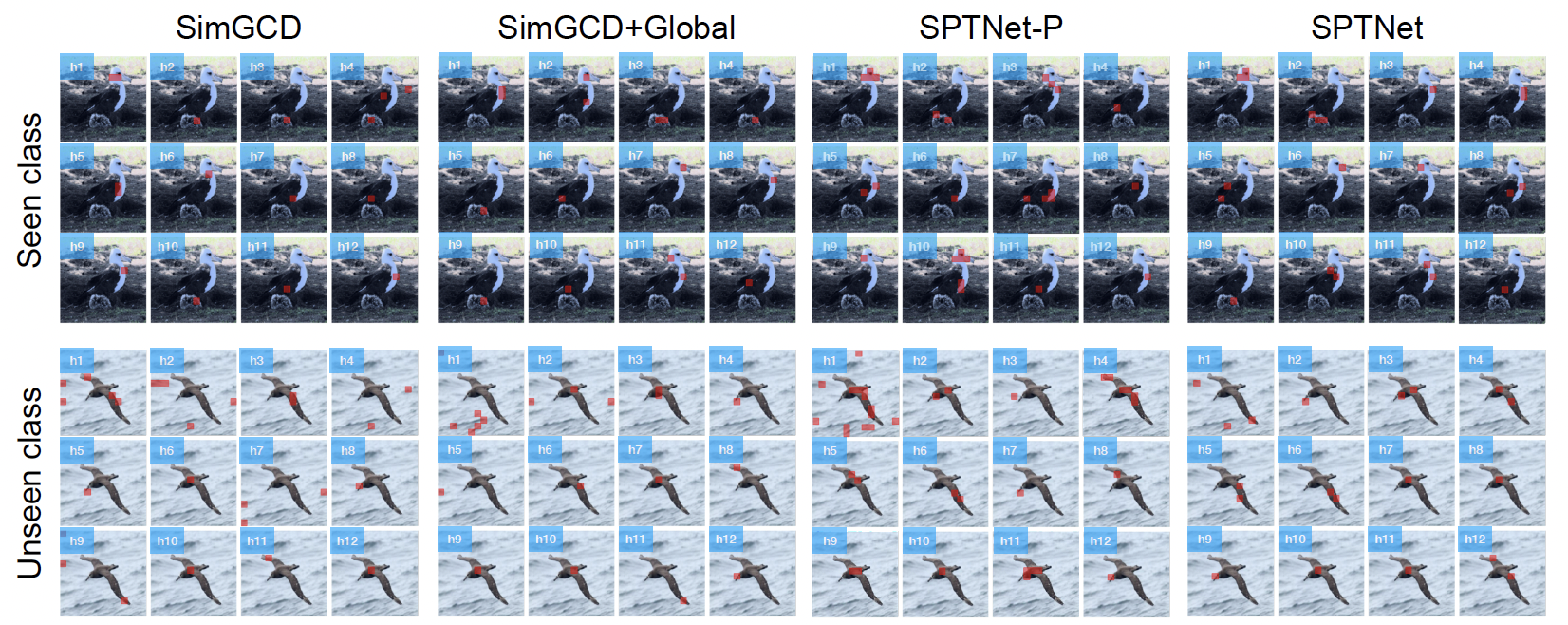

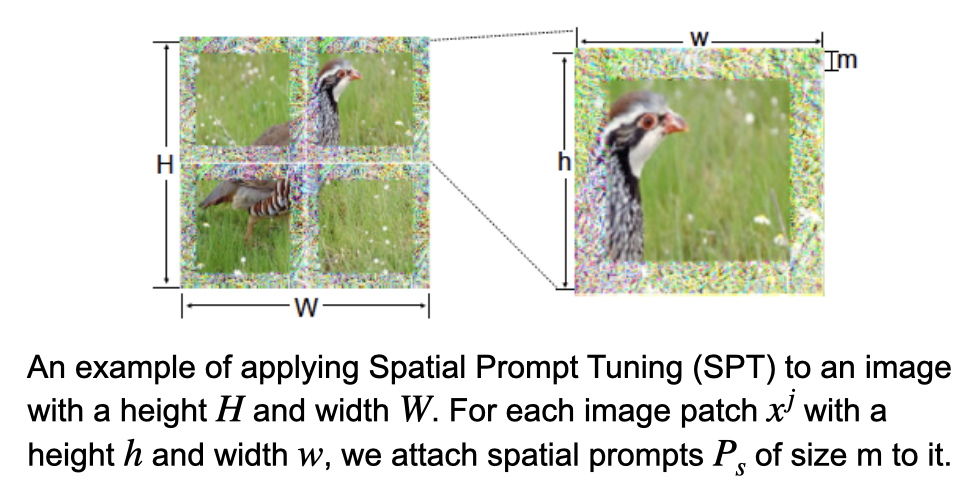

A key insight in GCD is that object parts are effective in transferring knowledge between old and new categorie. Therefore, we propose Spatial Prompt Tuning (SPT) to serve as a learned data augmentation that enables the model to focus on local image object regions, while adapting the data representation from the pre-trained ViT model and maintaining the alignment with it.